Data-Driven Decisions Start Here

Transform your raw data into actionable intelligence with Pinnacle AI’s Data Engineering solutions.

Data Validation / Quality Checks

Data Transformation

Data Sourcing / Ingestion

Data Storage

Data Orchestration

Monitoring & Maintenance

Data Governance & Security

Turn complexity into clarity.

My Technical Assistant gives every team member—technical or not—the ability to navigate complex systems, retrieve accurate data, and execute technical tasks with ease.

Intelligent help, wherever it’s needed.

Trained on your internal systems and built on a modular, secure architecture, this assistant delivers precise, context-aware responses across workflows, departments, and use cases.

What It Delivers:

Natural language support for technical info, troubleshooting, and documentation

Automated task execution across cloud and on-prem systems

Enterprise-grade security with local deployment options

Modular design for tailored functionality and scalability

Accelerated onboarding with live walkthroughs and guided support

Key Features

- Create robust environments for exploration, modeling, and insights.

- Consolidate data from multiple sources into one structured system.

- Workshops and interviews to define business goals and data needs.

- Cleanse, enrich, and organize data using ETL pipelines.

- Build scalable, modern cloud-based data lakes.

- Ensure compliance, access control, and system integrity.

- Custom visualizations with filters, drill-downs, and reports.

- Build user-friendly dashboards and query interfaces.

- Validate data accuracy, reliability, and performance.

- Enable teams to maintain and evolve the solution internally.

Pinnacle AI’s Data Engineering Practice

Requirement Gathering: Conduct workshops and interviews to understand business needs, data sources, and analytics requirements.

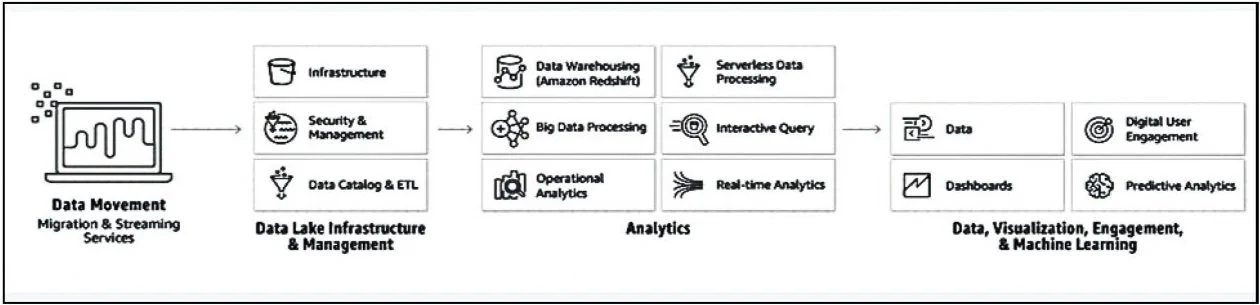

Data Lake Design and Implementation: Design and deploy a scalable Data Lake infrastructure using appropriate technologies and frameworks.

Data Integration and Ingestion: Develop mechanisms to integrate data from various sources and ingest it into the Data Lake.

Data Transformation and Processing: Implement ETL (Extract, Transform, Load) processes to cleanse, transform, and enrich data in the Data Lake.

Analytics Framework Development: Design and build an analytics framework that supports data exploration, analysis, and visualization.

Self-Service Capabilities: Develop user-friendly interfaces and tools to enable end-users to access, query, and create reports from the Data Lake.

Interactive Visualization Development: Create interactive visualizations using various chart types, filters, and drill-down capabilities.

Data Governance and Security: Establish data governance policies, access controls, and data security measures to ensure compliance with relevant regulations.

Testing and Quality Assurance: Conduct thorough testing of the Data Lake and analytics components to ensure accuracy, performance, and reliability.

Documentation and Training: Prepare comprehensive documentation and conduct training sessions to enable effective usage and maintenance of the implemented solution.
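To make the Data Transformation and Processing step above more concrete, here is a minimal sketch of an ETL flow in Python. It is an illustration only, not a description of Pinnacle AI's actual tooling: the sample records, the column names, the `orders` table, and the `size_band` enrichment rule are all hypothetical, and an in-memory SQLite database stands in for a real Data Lake.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; the column names are illustrative only.
RAW_CSV = """order_id,customer,amount,country
1001, Alice ,120.50,us
1002,Bob,,de
1003,Carol,75.00,US
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Cleanse and enrich: trim whitespace, drop rows with no amount,
    normalize country codes, and add a derived size band."""
    cleaned = []
    for row in rows:
        row = {key: value.strip() for key, value in row.items()}
        if not row["amount"]:
            continue  # cleanse: skip incomplete records
        amount = float(row["amount"])
        cleaned.append({
            "order_id": int(row["order_id"]),
            "customer": row["customer"],
            "amount": amount,
            "country": row["country"].upper(),
            # enrich: hypothetical business rule, assumed for this sketch
            "size_band": "large" if amount >= 100 else "small",
        })
    return cleaned


def load(rows, conn):
    """Load: write the transformed rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, "
        "amount REAL, country TEXT, size_band TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES "
        "(:order_id, :customer, :amount, :country, :size_band)",
        rows,
    )
    conn.commit()


def run_pipeline(text, conn):
    """Run extract, transform, and load; return the number of rows loaded."""
    rows = transform(extract(text))
    load(rows, conn)
    return len(rows)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    loaded = run_pipeline(RAW_CSV, connection)
    print(f"Loaded {loaded} rows")
    for record in connection.execute("SELECT * FROM orders ORDER BY order_id"):
        print(record)
```

Keeping extract, transform, and load as separate functions lets each stage be tested on its own, which lines up with the Testing and Quality Assurance step. A production pipeline would typically swap the in-memory pieces for real source connectors and Data Lake storage.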